I ran through 4 models already and nothing seems to be working. I can only learn 3 patterns out of 4 at any one time. The fact that the same problem shows up even after I tried to correct it 4 times makes me believe there must be something else that is not right, something that I’m not aware of… And the 4 models are not just changes to some numbers: each model has a different way of forming and breaking synapses, different rules. The last model is the most complex, allowing for every conceivable permutation, and it still gets stuck in timing issues. I still have a couple more ideas to try out, but not many, and I’ll be running out of ideas soon… And no idea is earth-shattering… so I don’t have much hope that it will solve the problem… My main problem remains that the models are too complex and I can’t predict what should happen; there are always unintended consequences. So either I’m going to make unexpected progress soon or I’ll stop working on this project…

What is learning ?

This seems like a simple question for a ML algorithm .. train some variables to some values and the combination uniquely identifies something. But those variables can also change when something new is learned and you lose the initial meaning..

From my previous post, I concluded that learning must be somewhat random, but I was not happy with that conclusion. After all, we can all tell a circle is a circle, so it can’t be that random. So I eventually came up with a middle-ground theory.. Assume 4 patterns with equal probability: there has to be a network configuration that is stable and has all 4 patterns “memorized”, as long as no additional information (additional patterns) is entering the network. I managed to prove there are such states in a 3-neuron and then a 4-neuron matrix. But in a 4-neuron matrix there is more than one such stable configuration, and there are also states that are stable but not specific, meaning one neuron will learn 2 patterns and another one will learn the remaining 2 patterns in a symmetrical configuration. That was to be expected but still depressing 🙁 . So the new theory is simplistic and incomplete, but I’m sure it is the basis for “learning”. I still have to find a way to make learning more stable (perhaps permanent, through an additional synaptic variable).

I’ve also started treating the neuron more like an atom with electrons, the electrons being the synapses. So I’m actually moving away from the “fitting” hypothesis (and synaptic strength). This theory led me to believe that there must be “empty” places on a dendrite where there is no synapse but where a synapse could have been: a forbidden energetic location, used to separate related patterns. So all synapses have defined energies that can be perturbed by input data, but a perturbation will either lead to another defined configuration or just fall back to the initial state with no change. I just don’t see how a synapse could be a continuous function.
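As a rough illustration of the “defined energies” idea (not the actual model), here is a minimal Python sketch. The level values, the missing level at 3.0 and the `settle` function are all assumptions made up for this example; the only point is that a perturbed synapse ends up on an allowed level or falls back to where it started, never in between.

```python
# Hypothetical allowed "energy" levels for a synapse.
# 3.0 is deliberately missing: it plays the role of a forbidden location.
ALLOWED_LEVELS = [0.0, 1.0, 2.0, 4.0]


def settle(level, perturbation, allowed=ALLOWED_LEVELS):
    """Return the allowed level closest to the perturbed value."""
    target = level + perturbation
    return min(allowed, key=lambda a: abs(a - target))


small_push = settle(1.0, 0.3)   # too small to leave level 1.0
large_push = settle(1.0, 0.8)   # large enough to reach level 2.0
near_gap = settle(2.0, 0.9)     # lands near the forbidden 3.0, falls back to 2.0
over_gap = settle(2.0, 1.2)     # jumps across the gap to 4.0
```

In this toy version a small perturbation changes nothing, and a value that would land in the forbidden region is pushed to one side or the other, which is one way to read “used to separate related patterns”.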

In conclusion I’m still far far away from any meaningful progress 🙁

What is true for a neuron ?

Whatever method we use to extract meaning from data, truth seems to be elusive. Assume two events A and B are 90% correlated, meaning that 90% of the time A precedes B. Is there a cause and effect between A and B ? What does “precede” mean ? Day precedes night and summer precedes fall. So without a time frame, we cannot correlate events A and B. Assume the correlation is 100%. Does that mean a cause and effect relationship ? We don’t know, maybe we did not pool enough data, or the time frame is wrong…
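To make the time-frame point concrete, here is a small Python sketch. The event times and the `precedence_fraction` helper are invented for illustration and are not part of the neuron model; the sketch only shows that the same two event streams look uncorrelated, 90% correlated or 100% correlated depending on the window chosen.

```python
from bisect import bisect_right


def precedence_fraction(a_times, b_times, window):
    """Fraction of A events followed by at least one B event within `window`."""
    if not a_times:
        return 0.0
    b_sorted = sorted(b_times)
    hits = 0
    for t in a_times:
        i = bisect_right(b_sorted, t)  # index of the first B strictly after t
        if i < len(b_sorted) and b_sorted[i] - t <= window:
            hits += 1
    return hits / len(a_times)


# Made-up data: A happens every 10 time units; B follows 3 units later,
# except after the A event at t=40.
a_times = [0, 10, 20, 30, 40, 50, 60, 70, 80, 90]
b_times = [t + 3 for t in a_times if t != 40]

narrow = precedence_fraction(a_times, b_times, window=1)     # 0.0
medium = precedence_fraction(a_times, b_times, window=5)     # 0.9
wide = precedence_fraction(a_times, b_times, window=1000)    # 1.0
```

With the wide window, the A event at t=40 is “followed” by the B event that really belongs to the next A, so the apparent 100% says more about the window than about cause and effect.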

How is this related to my neuron ? I can’t decide when events are correlated or not, therefore I cannot move the data upwards to the next layer for further processing. How is this problem solved in the biological neuron ? I inferred from the 1960s cat experiments of Torsten Wiesel that synaptic plasticity must stop in the lower layers of the primary visual cortex, and must stop rather early in life. I also assumed that the same process must happen in the upper layers as well, but within a longer time frame. I also found a recent article (Rejuvenating Mouse Brains …) talking about a perineuronal net, which prevents neurons from forming new synapses. This tells me that we decide rather randomly what truth is, based on the data available up to some point in time, and that subsequent data is classified based on this old data, which cannot be changed anymore (or perhaps is extremely difficult, but not impossible, to change).

So how does this help me ? I decided that I also need to stop the “learning” process at some point, otherwise data keeps changing, resulting in ever-changing conclusions. But when to stop it ? Hard to say, it depends on the available data. If the available data is enough to represent “reality” then I can confidently stop the learning process. But what does “represents reality” mean… I’m thinking that reality is whatever sensory data is available for analysis, coupled with the environment in which the neuron evolves, coupled with some evolutionary programming, objectives such as surviving. This all looks way too philosophical to be of any practical use, but perhaps it is all statistics underneath..
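One purely statistical way to decide “when to stop” can be sketched in a few lines of Python. This is only an assumption about what a stopping rule could look like, not the rule used in the model: a running average stands in for a learned variable, and the hypothetical `tolerance` and `patience` parameters freeze it once new data stops moving it.

```python
class FreezableEstimate:
    """Running mean that stops updating once it has been stable long enough."""

    def __init__(self, tolerance=0.01, patience=5):
        self.value = 0.0
        self.count = 0
        self.tolerance = tolerance  # an update smaller than this counts as "calm"
        self.patience = patience    # number of consecutive calm updates before freezing
        self.calm_steps = 0
        self.frozen = False

    def observe(self, x):
        if self.frozen:
            return self.value  # learning has stopped; later data is ignored
        self.count += 1
        step = (x - self.value) / self.count  # incremental running-mean update
        self.value += step
        self.calm_steps = self.calm_steps + 1 if abs(step) < self.tolerance else 0
        if self.calm_steps >= self.patience:
            self.frozen = True
        return self.value


estimate = FreezableEstimate()
for _ in range(6):
    estimate.observe(1.0)        # consistent data: the value settles at 1.0
frozen_after_six = estimate.frozen
estimate.observe(100.0)          # arrives after the freeze, so it has no effect
```

The weakness is the same one described above: whatever happened to arrive before the freeze becomes the “truth”, and everything later is judged against it.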

Decoding firing rates

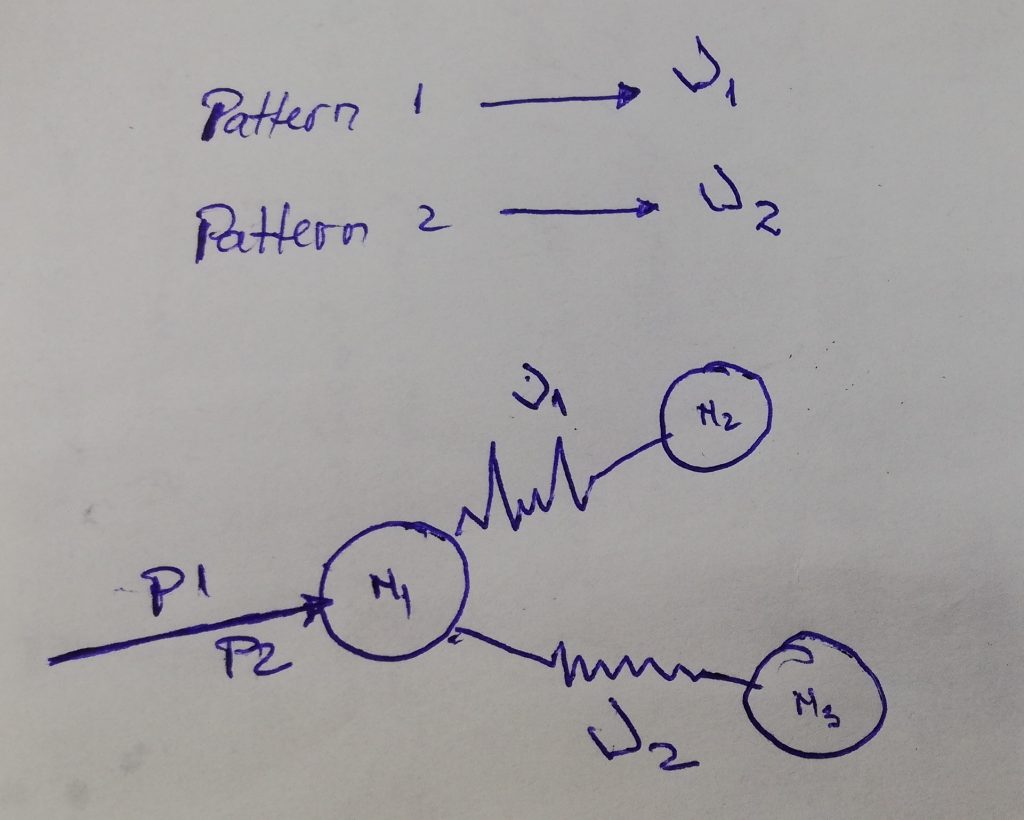

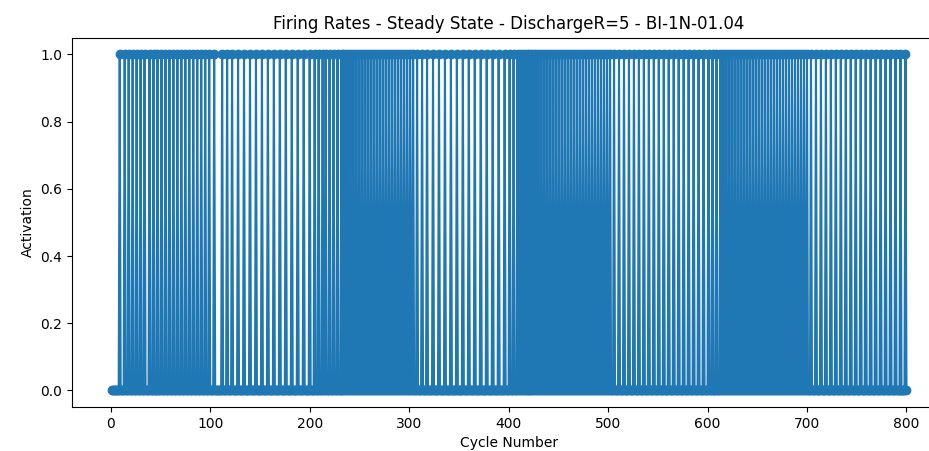

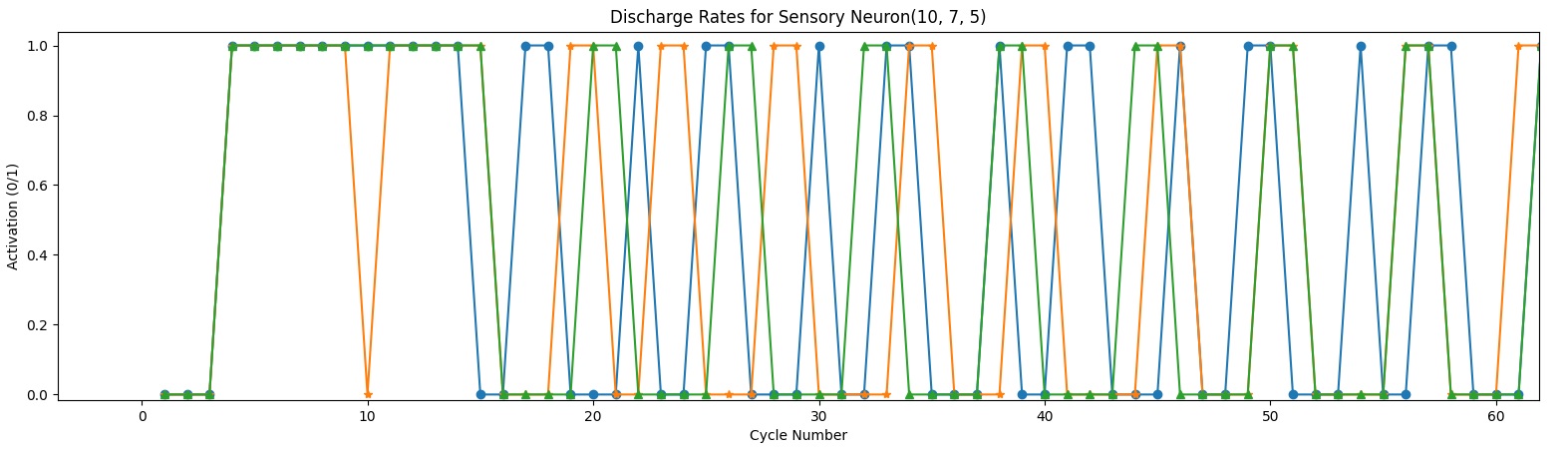

… and it did not work… I spent close to a month on this and got nothing. And this was supposed to be easy. The idea was to have neurons “learn” to respond to certain frequencies and perhaps not to others. Something like this:

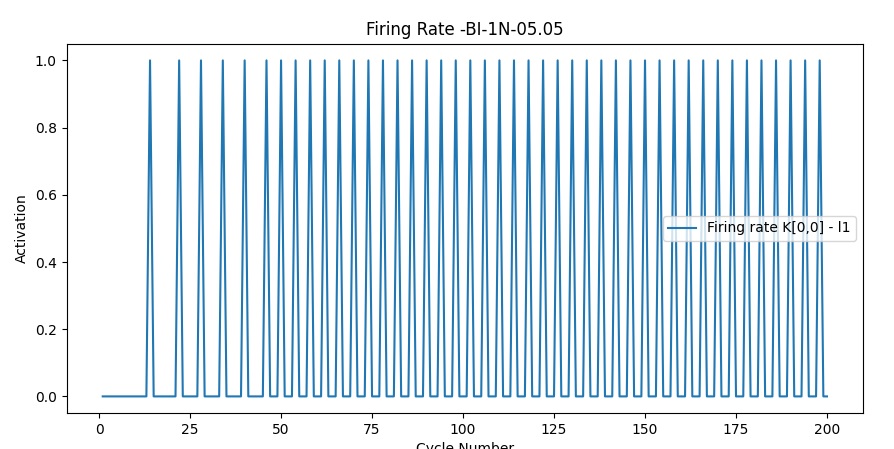

It did not work within the model, I should say. Otherwise it is just training a variable to a certain value and it’s done. But within my model, I could not find a variable that would fit the purpose, and that’s because no variable is truly irreversible. So the two neurons N2 and N3 initially learn pattern 1 and pattern 2 respectively, but then, after a couple of trials switching between patterns, both learn just one pattern and do not respond to the other one, and if I insist on the pattern with no response then the neurons will adapt and respond to that pattern again. Then I started doubting the whole idea of “learning” a certain frequency. Is the learning process for frequency at the synapse level ? At the neuron (body) level ? Add to this the fact that firing rates are either faster or slower right at the beginning of a new pattern :

and there you have it, doubting everything.. I read online to see how the biological neuron adapts to changing frequencies but I found nothing of interest.. vague explanations about potassium channels and vague descriptions of “natural” frequencies of a neuron. So I’m giving up on the 1 Neuron applications. With a single neuron I can’t really determine the impact of learning frequencies a certain way.. Also, lately I started doubting the “synaptic strength” interpretation. While that process is real (an increase of AMPA receptors in the postsynaptic neuron), I don’t think it plays a role in the learning process. It may play an indirect role, such as breaking or forming new synapses, but by itself it is not “learning”.

What’s next ? I’m going to switch to a 2 by 2 model, see if I can learn more from that.

Adding time to the fray

I’ve resisted adding time for a long time, first because I did not see the need, and second because it seemed like a very complicated variable to deal with.. But now the time has come… it seems.

As I mentioned earlier, I have in my mix of variables an equilibrium process driving one of these variables (the equivalent of the release of glutamate). I thought I could use cycles to drive the process but it seems it should be the other way around… This variable should drive the cycles…

Why should time be important ? It seems to me that “time” is what gives importance to events. If I don’t assign importance to events, either nothing is learned or everything is. Both options are bad… You may think learning everything is good, but it is not: it increases processing time and prevents generalization, and in the end nothing can store or process as much data as we encounter every day..

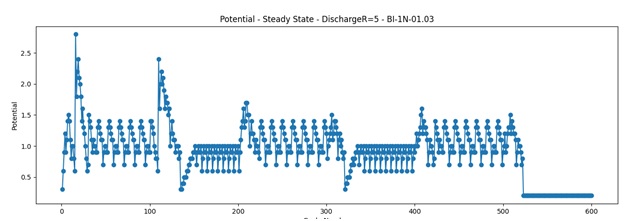

I decided to go with synaptic plasticity to synchronize neurons… The first simulations look good but I’m far from reaching a conclusion. Even with the mess created by the lack of synchronization I was still able to see some encouraging results for the learning mechanism.. the fitting functions powering the neuron are doing an ok job, adjusting all synapses to learn 2 patterns, but I’m not quite there yet. When the neuron first sees a pattern the deviation from equilibrium is big, it adjusts, then I switch patterns.. Eventually I should see no deviation from equilibrium when switching patterns. What I see instead is that the deviation is decreasing but it does not go away (as I think it should).. So I still need to adjust the fitting functions some more, but I was really happy to see this working as well as it did !!! Here it is, Potential vs Time (not really time but cycles for now), for 2 totally different patterns (zero overlap) with a symmetric distribution on only 2 dendrites.

Also the 2 patterns can be easily distinguished observing the firing rates:

I’ve been thinking of taking a detour to showcase some 1 Neuron nonsense applications 🙂

Maybe show how I can detect lines of different colors, or detect a handwritten letter.. Mind you, the detection of a single letter may sound cooler than it actually is.. You change size or position … and pufff, no recognition… I’ll see… if I can do it in a single day, I’ll do it, if it takes more time… I don’t know..

Firing Rates and synchronization

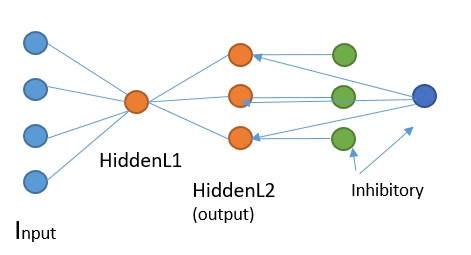

I finished updating the code and now I can run with layers of different sizes. Now I’m not so sure I needed it, but it’s done.

My plan was to carefully graph various variables from the learning mechanism when a single neuron receives multiple inputs with different distributions on dendrites. But again I got stuck on the firing rates and the lack of synchronization between input neurons and hidden layer neurons… I’m not sure if they should synchronize in the first place, but if they should, then I have two possible mechanisms: either through the learning mechanism (synaptic plasticity) or through the inhibitory neurons.

As it is right now, the learning mechanism is activated and is stabilizing, to some degree, the firing rates for the hidden layer neuron. Of course, if I use exactly the same firing rate it works without activating the learning algorithm. But I’m not happy with the fact that the neuron is always out of balance, changing variables back and forth for no good reason..

I joined a meetup group on Deep Learning and went to two meetings, about GNNs and Transformers. I was vaguely familiar with both, but after seeing how they work in more detail I realized that my algorithms are not that different from either.. well, the differences are still big but there are intriguing similarities. I believe the Transformer could be made much faster when modified with some algorithms I developed, but it’s hard to say until I understand everything better. Maybe I’ll talk to the guys hosting the event.

Anyway, firing rates seem to be a must for my algorithm: they allow the neuron to receive data from neighbors in order to make its own decision, and they also allow for feedback from upper layers. Without firing rates, both events would come too late..

Now I’m reading literature because I’m undecided about how to proceed…

Long term vs short term memory

I’m still in the process of changing the code to allow for a variable size for each layer. Very tedious, it seems all functions are affected by this change.

Anyway, I still have time to work on theoretical aspects. So I’ve been thinking: even if my algorithms are not there yet, they still allowed me to see some troubling results. It seems to me that changes made during the “learning” process are “too irreversible”. All changes I make within the neuron are event driven; only a single process is an equilibrium process, which is to say that its forward rate is event driven but its reverse rate is time (cycle) driven. So while the changes are not irreversible, they can only be reverted by an event. And if that event is not present the change will remain.. This leads to situations where the AI will see things that are not there. I observed this process in previous versions, where I mentioned that the current result depends “too much” on the previous patterns, but the context seemed different at the time (see my previous posts). While I did not solve the synchronization issue from my previous posts, I’m now running “empty” cycles to bring neurons to a neutral state. This way I can be sure that the learning process is the one causing the ghosting and not the lack of synchronization.
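The difference between an event-driven change and the equilibrium process can be shown with a toy Python sketch. The `gain` and `decay` values, the function names and the use of 0 as the neutral state are assumptions for illustration only; the real model’s variables are not shown in this post.

```python
def step(level, event, gain=0.5, decay=0.8):
    """Advance the toy equilibrium variable by one cycle."""
    level *= decay        # reverse rate: applied on every cycle
    if event:
        level += gain     # forward rate: applied only when an event occurs
    return level


def run_empty_cycles(level, tolerance=1e-3, max_cycles=1000):
    """Run cycles with no input until the variable is back near neutral (0)."""
    cycles = 0
    while abs(level) > tolerance and cycles < max_cycles:
        level = step(level, event=False)
        cycles += 1
    return level, cycles


equilibrium = 0.0   # relaxes on its own, cycle by cycle
event_only = 0.0    # changes only on events, so it keeps its last value
for _ in range(3):
    equilibrium = step(equilibrium, event=True)
    event_only += 0.5

equilibrium, cycles_used = run_empty_cycles(equilibrium)
```

After the empty cycles the equilibrium variable is back at its neutral state, while the event-only variable still carries the trace of the three events. That leftover trace is the kind of thing that can show up as ghosting on the next pattern.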

So the question now is: when, where and how to store long term memory, and how to retrieve it in such a way that will still make my AI see things that are not there 🙂 … as it does now… Where ? In some other deep layers (hippocampus ?). When ? That is unknown. How ? Similar to how they are stored now, but it depends on When. How to retrieve it ? I need another variable in my synapse definition, so at some point down the line I’ll change that variable based on inputs from layers storing long term memory.. I think..

On a different topic, my learning mechanism is for all intents and purposes a fitting function.. Here there is also a problem… much like the Pauli exclusion principle for electrons, it seems that synapses cannot occupy the same space… so some “energy” values should be excluded or limited… That, I hope to clarify when the code is up and running and I can do some real simulations. But it looks… complicated.. It may not seem so, but this is linked to the previous topic 🙂

Synaptic strengths and updates

I worked hard in the past month to implement the new theory but I made little to no progress. Every time I think I understand something and solve some problems, I find that things are much more complicated than I previously believed them to be. New complexities that I did not think of… at all.. So for the first time I’m actually pessimistic…

Synaptic strength in my model is defined by 3 main variables; the synapse is defined by 5 variables in total. At some point I realized that there is an interplay between learning new things and remembering old things, and the new theory should have solved the issue. The mathematical model is limited to 2 synapses and I cannot actually predict what would really happen when the neuron is inserted into the network. But in theory it should solve the issue of learning/remembering. I inserted the neuron into the network immediately because the code no longer supports testing a single neuron by itself. But once the neuron is inserted, the network is too simple to test the learning mechanism and, at the same time, too complex when I add more than 4 neurons… So I need to get back to a simpler version where I can test only a single neuron but with complex patterns.

I concluded that while simple and complex cells may seem very similar in their biology, from a functional standpoint they must be very different. Simple cells select some patterns precisely, while complex cells differentiate patterns through firing rates. I believe the simple cells are not that dependent on firing rates, but in the model I’m using this behavior cannot really be ruled out.

I may have found a way to selectively group signals based on their complexity, meaning that complex signals will converge to the center of the surface, while the simpler patterns will stay on the periphery. But at this time I only have two areas and I can’t be sure more areas will form, or how they should form. Also, an area (in the current simulation a 4 by 4 matrix) is somewhat hard to define in a general way. Right now I’m defining it as the edge where the inhibitory neurons overlap, allowing for more signal but at the same time being more controlled (it activates less because it is more often inhibited).

Inhibition works, somewhat … but it’s unpredictable because of the firing rates. Firing rates lead to a general unpredictability because I don’t know when to synchronize the neurons. Synchronization is possible but I don’t know the right triggering event. Sometimes they should synchronize other times they shouldn’t, because I don’t obtain the “correct” answer. Correct answer is also poorly defined.

I’ve also concluded that the network cannot be precisely corrected with specific feedback. Any feedback (back propagation equivalent in a way) has to be nonspecific. Meaning that it may lead to a desired outcome but it will also lead to unpredictable changes, secondary to the primary desired outcome, or, sometimes, the undesired outcome will be the primary outcome. This conclusion has come from trying to implement a “calling function” where the neuron will send a signal within an area, signaling the neuron is ready to accept new connections.

In conclusion I have very little good news: learning seems to be working but I can’t be very sure. Firing rates may be separating patterns but I can’t be sure of that either. Signal grouping is limited to only 2 areas where I believe there should be many areas..

What’s next ? Next I’m gonna go backwards.. I need to create a branch where I can test the learning mechanism with a single neuron, but with complex input. I’ll see from there..

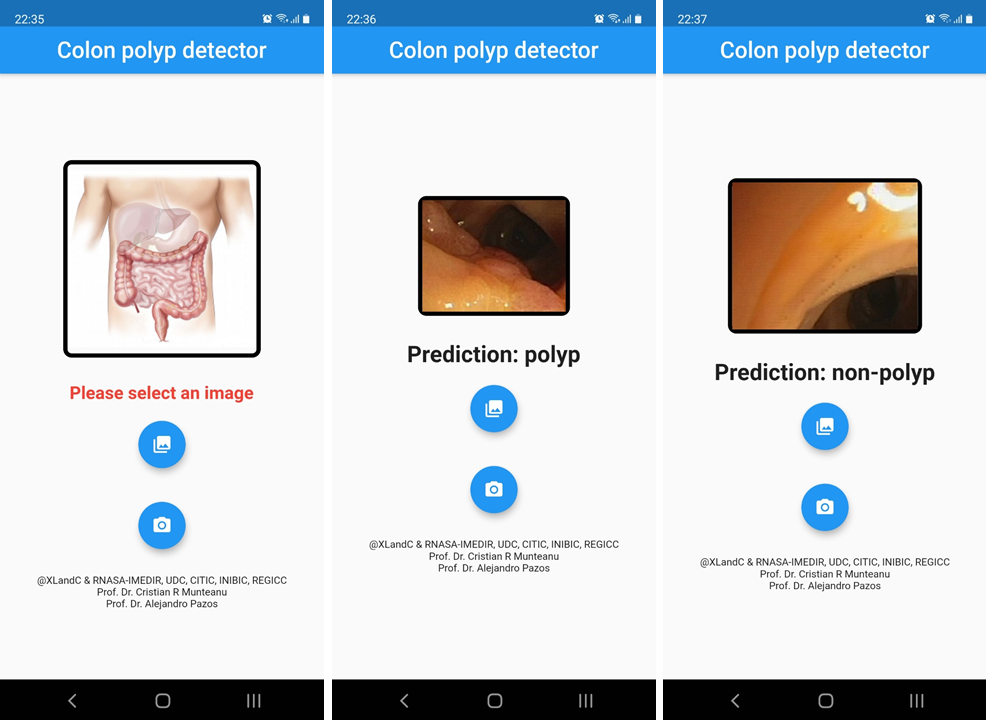

New use case for AI: Colon Polyp Detector in medical images as a free Android app

While I was busy with this new AI concept, our team worked on more down-to-earth apps using current state-of-the-art AI algorithms.

xLandC, in collaboration with the RNASA-IMEDIR group at the University of A Coruña (Spain), implemented a new proof-of-concept mobile tool to detect colon polyps in colonoscopy images: the Polyp detector app, available on the Google Play store at https://play.google.com/store/apps/details?id=com.xlandc.polypdetect. A deep learning classifier trained on a free dataset has been implemented as a TensorFlow Lite model in a free Android app built with Flutter (all tools from Google).

The model is able to detect only colon polyps in medical images and we will improve it with future updates. It’s free of ads and no user data is stored, tracked or used in any other way by xLandC. All predictions are evaluated locally on the user’s device and the AI model is also stored locally. There is no central server. With each update we are able to replace the model with a better one and to improve the functionality of the application.

Just browse for one of your colonoscopy images and make your prediction. In addition, the camera can be used to take a picture.

More failures, but a new learning theory

While I was focusing on fixing the synchronization issue, I lost sight of another serious issue. Once I introduced the semantics of dendrites, I lost the learning mechanism.. Not only that, but I also lost the inhibition mechanism.. Inhibition could have been fixed somehow, but I realized that without the current mechanism of inhibition it is not possible to synchronize neuronal activity. Maybe I need 2 inhibitions to get back to the previous state.

Anyway, when synchronization was somehow fixed, I realized that there was no learning anymore. Of course I did not think through the changes I introduced with the semantics of dendrites… I read more neuroscience articles and watched some online lectures.. I got disappointed by the lack of clarity, but eventually I came up with a new theory for neuronal learning inspired by what I learned. I ran multiple simulation scenarios in Excel sheets and it seems to work.

The new theory is unfortunately much more complicated, meaning many things could go wrong but it has some clear advantages and is much more in line with what’s known (or assumed) in biology:

- learning now integrates firing rates (which I despise because it makes understanding more difficult)

- multiple synapses on multiple dendrites can activate now to generate an activation potential.

- there is a new mechanism for “dendritic growth”, which is to say that I now have a rule, based on activity, for when a dendrite can accept connections. The model does not tell me when to seek new connections though..

The drawback ? Firing rates… I still use the concept as defined in my previous post so I’m not using time but cycles to calculate a firing rate. I’m still hoping that I won’t have to use actual time for firing rates. Also there are still many unknowns, LTD is not so clear anymore, so I may still end up with yet another failure. In terms of coding, it should not be difficult to implement, but it will take some time to understand if something is “right” or “wrong”.
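For reference, a firing rate counted in cycles rather than in time can be sketched as below. The definition from the previous post is not repeated here, so the sliding window, the `firing_rate` helper and the spike trains are assumptions for illustration only.

```python
def firing_rate(spike_cycles, current_cycle, window):
    """Spikes per cycle over the last `window` cycles, ending at `current_cycle`."""
    start = current_cycle - window + 1
    recent = [c for c in spike_cycles if start <= c <= current_cycle]
    return len(recent) / window


# Two made-up spike trains, recorded as the cycle index of each spike.
slow_neuron = list(range(0, 21, 5))   # fires every 5 cycles
fast_neuron = list(range(0, 21, 2))   # fires every 2 cycles

slow_rate = firing_rate(slow_neuron, current_cycle=20, window=10)   # 0.2
fast_rate = firing_rate(fast_neuron, current_cycle=20, window=10)   # 0.5
```

The window length is the hidden choice here: too short and the rate jumps around at the start of every new pattern, too long and the neuron reacts late.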