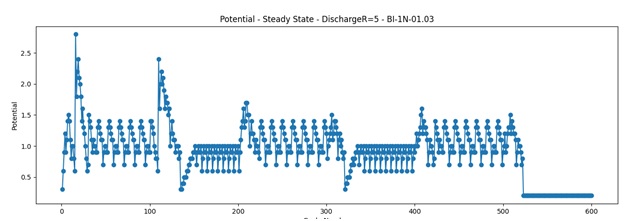

I’ve been getting many unexpected results in my quest for invariance. I thought it was because of a bug in the code or a bad theory. But no, everything seems to be in order; there are no errors that I’m aware of. So I was left with the improbable: I don’t get identical results for identical patterns, because “similar” is not what I assumed it to be. Something is similar (or identical) not only when it is formed from identical components; it also needs to have the same history. When I was thinking of “context”, I was usually thinking only about the “stuff” around it. I did not think that I needed the whole history behind that event (history, in this context, being the sequence of patterns in time).
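
The idea that identity depends on history can be illustrated with a minimal Python sketch. It is not the simulation's actual code. Here an “event” is the current frame plus a short window of previous frames, and two events count as identical only if the whole window matches. The names `PatternMatcher`, `run`, `same_event` and the `history_length` window size are hypothetical. How much history really matters is exactly the open question.

```python
from collections import deque


class PatternMatcher:
    """Hypothetical sketch: an event = current frame plus its recent history."""

    def __init__(self, history_length=3):
        # Only the last `history_length` frames are kept (assumed window size).
        self.history = deque(maxlen=history_length)

    def observe(self, frame):
        # Store frames as tuples so whole histories can be compared directly.
        self.history.append(tuple(frame))
        return tuple(self.history)


def run(frames, history_length=3):
    """Feed a sequence of frames and return the final event (frame + history)."""
    matcher = PatternMatcher(history_length)
    event = ()
    for frame in frames:
        event = matcher.observe(frame)
    return event


def same_event(a, b):
    """Two events match only if their entire stored history matches."""
    return a == b


# Both sequences end on the same frame, but reach it by different paths.
e1 = run([(0, 1), (1, 0), (1, 1)])
e2 = run([(1, 0), (0, 1), (1, 1)])
print(e1[-1] == e2[-1])    # True: the current frames are identical
print(same_event(e1, e2))  # False: the histories differ
```

With a window of 1 this reduces to comparing components only, which is the assumption that turned out to be wrong.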

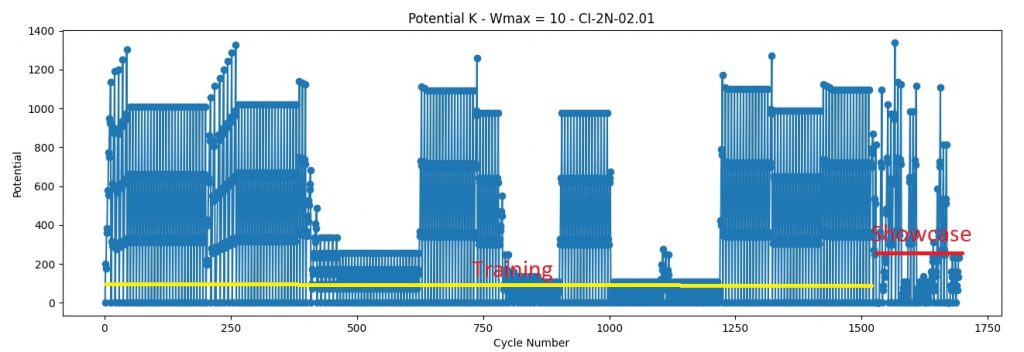

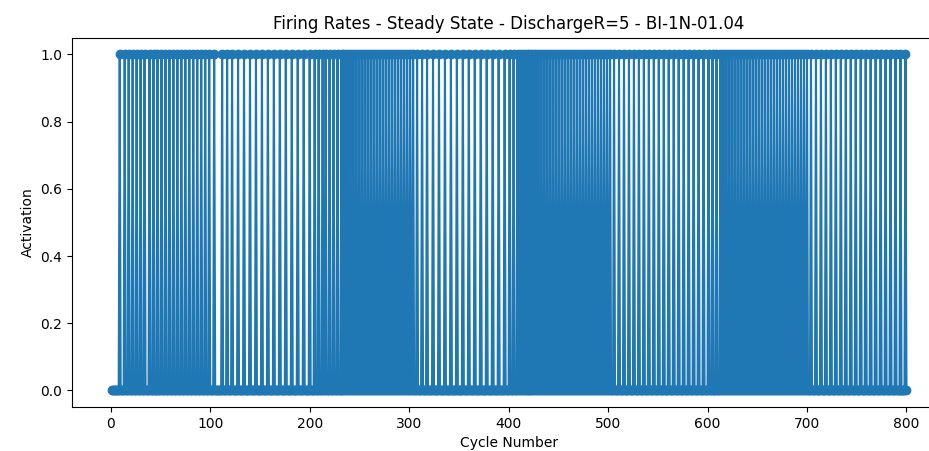

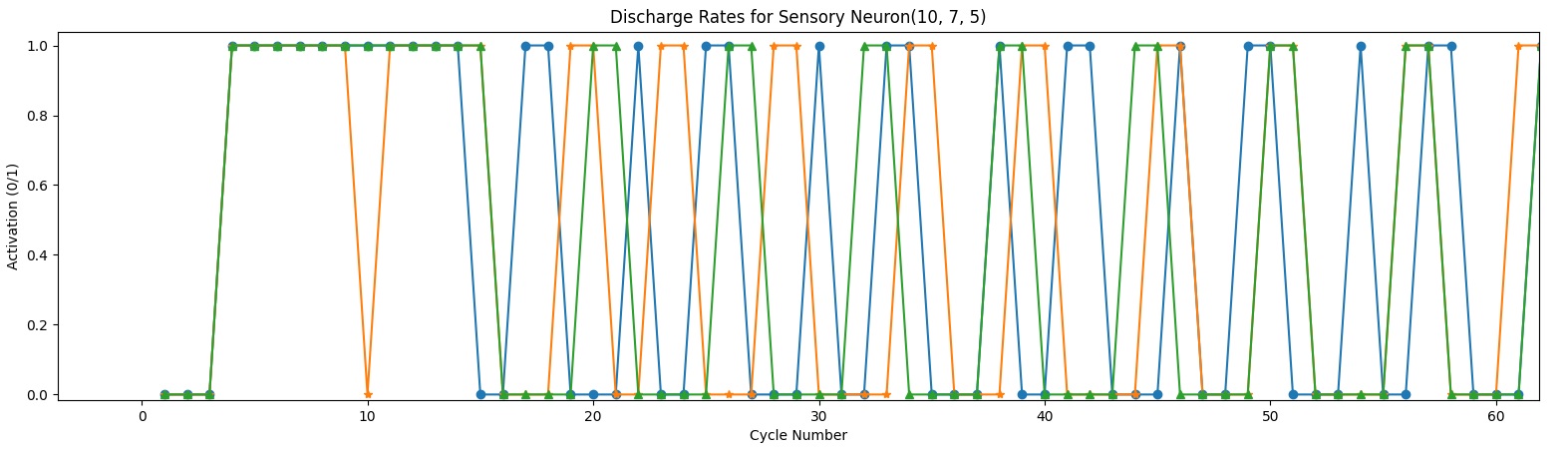

In the meantime, I have a few explanations for the lack of synchronization I now encounter on a regular basis.

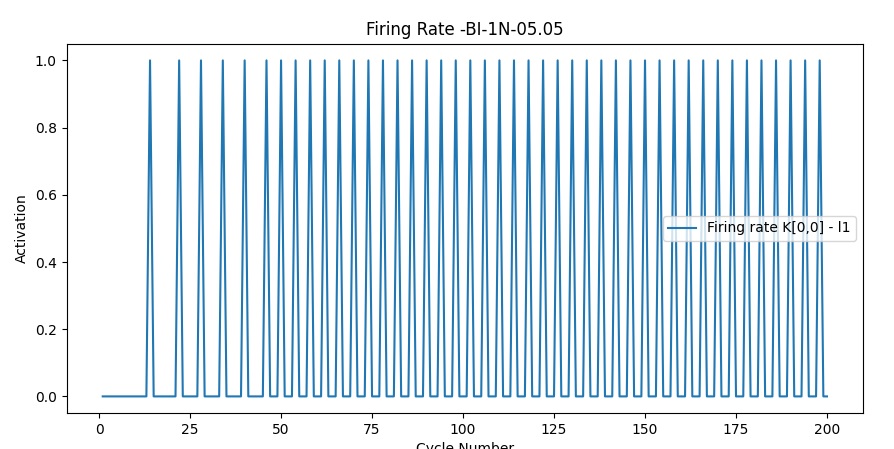

- I don’t have horizontal cells or amacrine cells. In my code this results in an out-of-phase state that cannot be corrected (my input cells don’t fire every frame but at a set frequency, currently every other frame). I have a button that brings them all back to frame 1 when this happens, but this is just a cheap, easy fix.
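
The phase problem can be sketched in a few lines of Python, under simple assumptions. Each input cell fires once every `period` frames (2, matching “every other frame”), offset by its own `phase`. `InputCell`, `spikes` and `reset_to_frame_one` are hypothetical names, and nothing here models horizontal or amacrine cells. The sketch only shows why, without such a correcting mechanism, the same stimulus produces different spike patterns depending on phase, and what the “bring them all to frame 1” button does.

```python
class InputCell:
    """Hypothetical input cell that fires once every `period` frames."""

    def __init__(self, phase, period=2):
        self.period = period  # "every other frame" -> period of 2
        self.phase = phase    # frame offset; cells can drift out of phase

    def fires(self, frame):
        return (frame - self.phase) % self.period == 0


def spikes(cells, frames):
    """For each frame, list which cells fired (1) or stayed silent (0)."""
    return [[int(c.fires(t)) for c in cells] for t in frames]


def reset_to_frame_one(cells):
    """Equivalent of the reset button: align every cell's phase to frame 1."""
    for c in cells:
        c.phase = 1


cells = [InputCell(phase=1), InputCell(phase=2)]
frames = range(1, 5)
print(spikes(cells, frames))  # [[1, 0], [0, 1], [1, 0], [0, 1]]: cells alternate
reset_to_frame_one(cells)
print(spikes(cells, frames))  # [[1, 1], [0, 0], [1, 1], [0, 0]]: cells in sync
```

The reset works only until the phases drift again, which is why it is a cheap fix rather than a mechanism.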

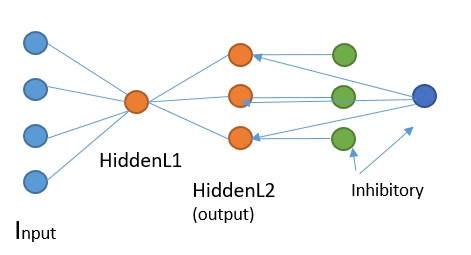

- The input cells are not out of phase, but patterns within the same visual field fire at different frequencies. I’m not sure what to do about this one; it could be normal and be somewhat “fixed” in the next layer. I was thinking of linking the inhibitory neurons to each other, so that when one is activated it inhibits the surrounding inhibitory neurons (meaning it inhibits the inhibition they were providing).
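
One way to picture the proposed mutual inhibition (disinhibition) is a minimal Python sketch. Inhibitory neurons sit in a row, each one is suppressed by its active neighbours, and whatever output remains inhibits the excitatory cell at the same position. The function name `net_inhibition` and the weights `w_inh` and `w_mutual` are hypothetical. This is a single-step, non-recurrent simplification: real mutual inhibition would settle over several time steps.

```python
def net_inhibition(active, w_inh=1.0, w_mutual=0.5):
    """
    Hypothetical one-step disinhibition sketch.
    active[i] is the drive (0..1) of inhibitory neuron i. Each inhibitory
    neuron is suppressed by the drive of its neighbours (i-1, i+1); its
    remaining output inhibits the excitatory cell at position i.
    """
    n = len(active)
    result = []
    for i in range(n):
        neighbours = [active[j] for j in (i - 1, i + 1) if 0 <= j < n]
        # Mutual inhibition: active neighbours reduce this neuron's output.
        output = max(0.0, active[i] - w_mutual * sum(neighbours))
        result.append(w_inh * output)
    return result


print(net_inhibition([1.0, 1.0, 1.0]))  # [0.5, 0.0, 0.5]: middle cell disinhibited
print(net_inhibition([0.0, 1.0, 0.0]))  # [0.0, 1.0, 0.0]: lone neuron inhibits fully
```

When a whole neighbourhood is active, the middle inhibitory neuron is silenced and its excitatory target is released, which is the “inhibiting the inhibition” effect described above.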