Something is still missing, but I can’t put my finger on it… There is no clear way to decide when a pattern is learned… In the absence of a clear criterion that would stop the learning and preserve the variables, the pattern keeps getting broken down into smaller parts until there is a single pre-synaptic neuron for a single post-synaptic neuron… and even that last link breaks, resulting in no connection between layers… I believe I mentioned this problem more than a year ago and now I’ve come full circle back to the same problem… The equations for synapses are different, the conditions for initiating or breaking connections are different… yet this problem remains… I’ve looked back through my notebooks and found no notes commenting on this problem. It seems I was not sure this was a real problem… No solution seems obvious at this point. Stopping the learning process at an arbitrary time has many drawbacks, but I see no other way of going forward…

make it or break it – take 2

Yup, that time has come. No more wiggle room. This should be the first step in what I want to build … a new breed of AI. This first step should show how learning is accomplished in such a system. Signals should be classified and recognized as the same when seen again. The first showcase I want to build is 2-color recognition on a 3-layer network: L1 2×2, L2 5×5 and L3 2×2, with a single inhibitory neuron on each of layers 2 and 3. I’m also considering showing angle recognition on a 3×3, 3×3, 1 network configuration.

I did not add color vision, but I added a simpler simulation where different “pigments” (as in cone cell pigments) result in different firing rates per neuron.

This could take days or months or maybe it won’t work at all.

make it or break it time

I’m nearly there, about two more weeks to get there. I worked a lot, but mostly doing simulations. The problems more or less remained the same:

- Inter-layer transmission of signal. Because activation frequency decreases with each layer, by layer 3 I already get to the point where the frequency is too low to activate neurons, and synapses are classified as low frequency and removed. Increasing some sort of synaptic strength did not work because that increase is limited, it can’t be something arbitrary. Same-layer connections should work as an amplifier, but they suffer from bad timing. There are mechanisms that should synchronize the firing events, but they too fail more often than not. I still have hopes to improve on this though. Another mechanism could be a persistent reinforcing feedback … Every 3 layers or so, the third layer should feed back on the first layer, increasing the frequency for both. It seems far-fetched, but I’m close to implementing this option too.

- Inhibition – I’m still looking for the proper level of inhibition. How many synapses should act together to overcome inhibition (meaning that, in spite of inhibition being present and limiting synaptic potential, the neuron should still fire by adding up many small synaptic signals)? For now I believe that this should be the desired mechanism, rather than the “all or nothing” mechanism I had so far. The all or nothing is still present when fewer synapses are activating. But the number of synapses needed to cooperate is still unclear .. it has to be related to some minimum frequency..

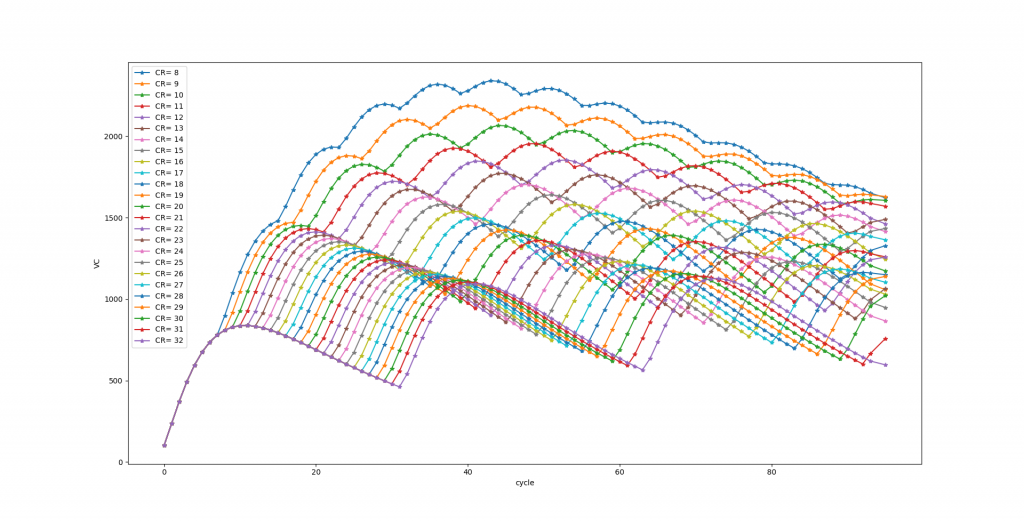

- Synaptic frequency – a single synapse seems to be very limited in the values it can take.. In theory I could have around 100 values, but in practical terms that number is even lower .. around 20 values.. Messing with this number is tricky … There is a hard theoretical limit for the highest and lowest values, but if I increase the number of values, I also increase the computation time and limit the inter-layer transmission. I believe I can find a reasonable compromise, but right now I feel severely limited by this problem..

- Synaptic connections – this has now become the most pressing issue and the most complicated.. Making and breaking synapses on large matrices is slow and requires me to keep track of every connection. A single neuron can connect to a lower layer, the same layer and an upper layer, plus an inhibitory layer .. all at the same time. So far I used a simplification: once removed, a synapse would not form again. But that was putting serious pressure on the mechanism for timing the connections, meaning a connection had to be the best connection possible from the first time. That is not working right. I tried to wait long enough for all connections to form in the first layer and only after that bind to the second layer.. There are too many cases to consider. So now I let connections form and break forever. But keeping track of all of them is difficult. I still wonder how two nearby neurons stop short of forming an infinite number of connections between them. They form many, to be sure, but not a fixed or an infinite number.. So when does the process stop.. and why ? If I don’t keep track of every synapse, two neurons would keep forming synapses between themselves..

- Training patterns – I thought they could be anything.. I’m not so sure anymore. Signals received from the retina are heterogeneous because there are 3 types of pigments for the cone cells and 2 types of bipolar cells.. This results in a complex pattern even when you deal with a very uniform input (say, looking at a white piece of paper). A heterogeneous signal works, while a homogeneous signal leads to “bad timing” .. nearby neurons receiving exactly the same signal cannot form connections among themselves. I have a workaround for this but I don’t like it, because what I see as input is not the real input (the white sheet of paper, say). I need to imagine the input… that makes understanding very difficult.

- Small input going into a bigger second layer.. I’ve procrastinated on this. I can create layers of different dimensions, but neurons don’t bind with some sort of step. I need to have neurons that don’t get direct connections from the lower layer, or, even if they all still bind, I need to have more neurons in the second layer to act as a signal amplifier ..

Inhibition

I implemented more sophisticated mechanisms for inhibition… Now an inhibitory neuron is identical in behavior with a regular neuron.

So I implemented 2 mechanisms: 1) the inhibition acts at the neuron body and 2) the inhibition acts directly on synapses. Neither of them makes any sense.. When inhibiting the body (1), synapses are not protected by the LTD/LTP effect => the net effect is a decrease in the firing rate and there is no permanent inhibition for any pattern.. When inhibition hits, a couple of activation cycles are skipped, so you have patches of decreased firing frequency alternating with no firing. When inhibition is at synapse level (2), I end up only with skipped activation cycles; the firing frequency remains the same since synapses are protected by LTP/LTD effects..

In both cases I see no use for that delay in activation, because it is not a single pattern that is delayed, all of them are. The separation between patterns is not great (a couple of cycles apart). The problem also comes from inhibitory neurons firing with very low frequency. Their activation depends on the excitatory neurons, which themselves have decreased activation as you go up in layer number.

I sort of defined my “objective” function for a synapse:

I also defined LTD and LTP. I initially believed they were just the fitting event for the objective function, but it seems they have multiple roles.

I made a lot of progress but still no “significant” progress..

Long term depression / potentiation

I use these terms very loosely to mean the increase (LTP) or decrease (LTD) of potential delivered by a synapse to the neuron body. I used some forms of LTP/LTD in my previous versions but they were never meant to approach the biological equivalents. Now, I spent some time reading what is known in biology about LTP and LTD, and while there are tons of papers on the subject, I could not find anything that has explored the need for such mechanisms.. They are used in “learning”, that’s a very vague statement.. I’ve been thinking and I cannot find a use case for them. I found a use for an LTD event during depolarization of the postsynaptic neuron, but no uses for LTD/LTP associated with low/high frequency inputs.. What is low/high frequency ? They seem arbitrary to me. I can use whatever frequency in my code, so I should be able to link these terms with something… but to what ?

However, I believe the main reason for having an altered synaptic potential is to change the firing frequency of the postsynaptic neuron… This conclusion troubles me, firing rate is crucial in selecting / separating events (inputs), any alteration (or missing alteration) can be picked up by the inhibitory neuron and amplified, resulting in vastly different results even when the initial change in synaptic output was extremely small. So if I don’t add them now and don’t understand them, they may come back to haunt me…. yet, I don’t need them..

My plan is to explore the implications of LTP and LTD on various other variables, but with no certain goal in mind I find that both boring and difficult.. Is there a paper showing they are actually “long” term changes ? I found a paper saying that very few last up to a week and most alterations vanish within hours. I don’t consider that long term…

Does LTP/LTD ever stop ? With age perhaps ? For certain layers maybe ? Do they become less frequent ? So many questions… Given my difficulties in transporting signal through layers, it is still feasible that some LTP/LTD events would be extremely difficult to change in deeper layers, so in the end they could be viewed as long term and part of the learning mechanism.. So they are long term because they are hard to change… speculations …

Inter-layer transmission

If a neuron in layer 1 (L1) requires 10 inputs to fire, and those 10 inputs are delivered in 10 cycles, another neuron in layer 2, also requiring 10 inputs for activation, is activated in 100 cycles by the neuron in L1… In the third layer, the number of cycles required for activation is 1000… So this cannot work like this.
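A minimal sketch of this arithmetic, assuming each neuron is driven by a single neuron in the layer below and receives exactly one input per firing of that neuron. The function name and parameters are hypothetical and not taken from the actual simulation code.

```python
def cycles_to_fire(layer: int, inputs_needed: int = 10) -> int:
    """Cycles until a neuron in `layer` (1-based) fires for the first time.

    Assumption: layer 1 receives one external input per cycle, and each
    deeper layer receives one input every time the layer below fires.
    """
    return inputs_needed ** layer


for layer in (1, 2, 3):
    print(f"L{layer}: {cycles_to_fire(layer)} cycles")
```

Under these assumptions the delay grows geometrically with depth, which is why a few layers are enough to push the firing frequency below any usable level.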

I was aware of this issue from the beginning, but I had hoped I could solve it by increasing synaptic efficiency, so basically a neuron from L2 would require not 10 inputs from L1, but say just 1… That would have been acceptable… The problem with this approach became apparent very late: by increasing synapse efficiency, the selectivity of the post-synaptic neuron decreases. So the solution I envisioned proved to be a dead end. Now I’m considering other approaches to deal with this slow transmission from layer to layer..

- Have multiple synapses between a neuron from L1 and a neuron from L2. This does not look very promising for various reasons, but maybe in combination with other ideas it could work… not necessarily 10 synapses, even 2 synapses would significantly reduce the delay.

- Have many more neurons in L2 than in L1. Those extra neurons would serve as some sort of amplifier .. they would bind among themselves and excite each other in a bizarre loop. I have played with such loops in the past but they resulted in continuous excitation. Maybe they could be used to store more patterns too… I was planning to add more neurons in L2 anyway, so I’m more inclined to start with this approach.

- Accept a serious reduction of signal in L2… Basically 10 neurons from L1 could link to a single neuron in L2, and that neuron would fire immediately after the 10 neurons from L1 fired because it receives 10 inputs. This could be part of the solution, but I don’t see this as acceptable (this is what is happening right now by default, when there are multiple bindings from L1 to L2).

- Something else that is unknown now…
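The effect of the first option in the list can be estimated with a rough sketch. It assumes every firing of a pre-synaptic neuron delivers one input per synapse, so `synapses_per_link` parallel synapses deliver that many inputs at once. All names here are hypothetical.

```python
import math


def cycles_with_parallel_synapses(layer: int, inputs_needed: int = 10,
                                  synapses_per_link: int = 1) -> int:
    """Cycles until a neuron in `layer` (1-based) first fires.

    Assumption: layer 1 gets one external input per cycle; between layers,
    each pre-synaptic firing delivers `synapses_per_link` inputs.
    """
    cycles = inputs_needed  # layer 1 needs `inputs_needed` cycles
    firings_needed = math.ceil(inputs_needed / synapses_per_link)
    for _ in range(layer - 1):
        cycles *= firings_needed
    return cycles


for k in (1, 2):
    print(k, [cycles_with_parallel_synapses(layer, synapses_per_link=k)
              for layer in (1, 2, 3)])
```

With 2 synapses per link this gives 50 cycles for L2 and 250 for L3 instead of 100 and 1000, a real reduction that still grows geometrically with depth.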

I’m also not happy with the inhibitory neurons… By acting fast (requiring just 1 input to go active) and being 100% efficient, they remove some of the learning rules I have envisioned.. They are not my immediate focus but they are bothering me..

The new synapse kinetics work extremely well, beyond my expectations.

Adding time #2

It seems that by adding kinetics to synapses I also added time to the algorithm. But time has always been an elusive variable. Time is the rate of change for some events. So time is not really correlated with the outside arbitrary unit of time and will depend on the computer power. It is very possible to correlate this internal time to the outside time, but for now that would serve no purpose. However, time is now embedded in multiple processes. What can be learned is now indirectly linked to time. The time component will determine what is correlated and what is “important”. Time also seems to determine how many patterns a synapse can learn without internal changes. Slower kinetics would allow for more patterns to be learned.. In a way, that would increase precision or selectivity. Increased precision requires more processing cycles.

On the update side.

I implemented the new kinetics at synapse level but I need some sort of kinetics at neuronal level. Without it I cannot decide when a neuron was active. Inhibitory neurons still work on the old simpler mechanism so inhibition is instantaneous and inhibits 100%. I may have to change that in the future.

Dendritic Growth 3

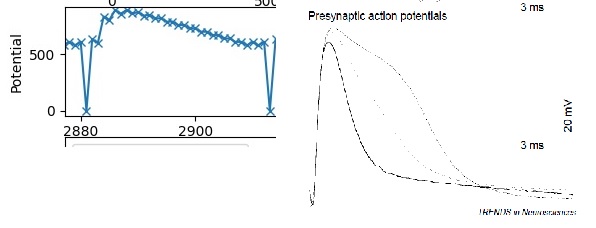

I have programmed in the first step, linking synapses on multiple directional dendrites, but no branching yet. However, when running this model I discovered that my kinetics for synapse potential don’t work well. They were good enough for the previous model, but in essence a kluge job that worked for the wrong reasons. Basically I’m not converging well to the firing potential of the neuron: I’m overshooting, and the correction, which is not good either, messes up the timing of the firing event. The resulting deviation cascades into the following layers, eventually leading to a wrong learning pattern. I added ordinary differential equations, but it did not help. The problem comes from dP/dC, where P is the potential and C is the cycle number … The cycle number is an integer and I can’t do anything about it => the convergence is still poor => W (AMPA receptors) fluctuates from pattern to pattern => a delay in forming a stable pattern in the next layer => that pattern is completely inhibited if it competes with another pattern
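To illustrate why an integer cycle count can cause overshoot, here is a toy sketch. It assumes a simple first-order relaxation dP/dC = rate · (target − P), which is only a stand-in and not the actual synapse kinetics, stepped with explicit Euler at a fixed step of one cycle. The function and parameter names are hypothetical.

```python
def relax(rate: float, target: float = 1.0, p0: float = 0.0, cycles: int = 6):
    """Explicit Euler steps of dP/dC = rate * (target - P), one cycle per step."""
    p, trace = p0, []
    for _ in range(cycles):
        p += rate * (target - p)  # step size is fixed at 1 cycle
        trace.append(p)
    return trace


print(relax(0.5))  # approaches the target from below, never crossing it
print(relax(1.5))  # jumps past the target and oscillates around it
```

In this toy model the remaining error is multiplied by (1 − rate) at every cycle, so with the step stuck at one cycle only a rate below 1 approaches the target without overshooting. Since the step cannot be shortened, the rate constants are the only knob left.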

I don’t want to reproduce real biological data in my simulation, but when I get stuck I look for inspiration in real data :). For now I’m only interested in the upward trend but I still wonder why the downward side looks so …. not symmetric .. Why does it take so long to go back to the initial state ? How long does it take though ? Can a neighboring neuron fire twice in the amount of time it takes for this synapse to regenerate ? Can this neuron fire again while this particular synapse is regenerating ?

I extracted many equations from the code hoping to solve them mathematically… but no luck there either, I don’t know how to solve so many linked simple equations.. but maybe someone does ..

Dendritic Growth 2

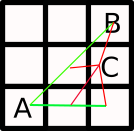

As usual, this is much more complicated than I thought. I can’t really decide when to branch, how much to branch and how long to grow a dendrite… In the picture, I want to connect A with C, but there is no dendrite growing directly in that direction, so it has to branch to reach C.. But branching can be done from various points, as shown in RED. Random branching, from whatever point on the GREEN lines (default dendrites), is out of the question.. The branching has to be done from precise points, where the dendrite connected with a different neuron, so I’m left with 4 branching points… Should I link C with 4 synapses from A’s dendrites ? Just one ? What if that breaks ?

I don’t have enough information to decide on a course of action, so I’m left with trial and error, like I did for the synapse kinetics (about 30 models that did not work).. So far I didn’t do much, coding wise. I only changed the code to accept directionality for dendrites and decided to go with 8 default directions, basically 8 dendrites that form only when they are needed, but unless the neuron is at the edge of the matrix, all 8 are needed .. Also, I decided to remove vacancies (locations previously occupied by a synapse) from the available places for new synaptic binding. That place will remain empty, presumably separating two patterns…
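A small sketch of the 8 default directions, assuming neurons sit on a rectangular matrix and that a dendrite is “needed” whenever a neighbouring cell exists in that direction. The names and the compass labels are hypothetical.

```python
# Row/column offsets for the 8 default dendrite directions (labels are illustrative).
DIRECTIONS = {
    "N": (-1, 0), "NE": (-1, 1), "E": (0, 1), "SE": (1, 1),
    "S": (1, 0), "SW": (1, -1), "W": (0, -1), "NW": (-1, -1),
}


def needed_dendrites(row: int, col: int, rows: int, cols: int) -> list[str]:
    """Directions in which the neuron at (row, col) has an in-bounds neighbour."""
    return [name for name, (dr, dc) in DIRECTIONS.items()
            if 0 <= row + dr < rows and 0 <= col + dc < cols]


print(needed_dendrites(2, 2, 5, 5))  # interior neuron: all 8 directions
print(needed_dendrites(0, 0, 5, 5))  # corner neuron: only 3 directions
```

Only neurons on the border of the matrix end up with fewer than 8 dendrites under this assumption, which matches the observation above.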

Dendrite Growth

So far I worked with a simplified model with a single dendrite. But moving the signal to layer two, with more inter-neuronal connections allowed, led to obvious errors. Why is this important ? In my model distance matters: synapses further away from the neuronal body contribute less to the overall neuronal potential. The dendrites, with their growth, provide that variable distance. I could not find a model in the literature to fit my requirements, so I came up with two very different models. Eventually I decided to start with the one that seems easier because it does not require any calculations (as in geometrical calculations). This model should lead to structures close enough to what is observed in the literature, but regardless of whether it’s close or not, it should clearly link synapses based on distance. It will allow for branching, which is very important since branching allows for synapses at the same distance from the neuronal body.

However, there are still many unanswered questions. What happens when a synapse is removed from a dendrite ? Does it bind to a further away position ? Is it removed forever ? Does it bind to a different dendrite ? Should I allow multiple synapses between 2 neurons ? What happens with the vacancy left by the removed synapse ? Does it remain empty ? Is it occupied by other synapses ? Does a further away synapse take its place ? What should I do about far away synapses (away from the neuron) ? Their contribution to the overall potential is insignificant even with a linear decrease in contribution with distance, and I now have an exponential decrease so it’s even worse.. Sure, in some cases the synaptic strength (AMPA receptors equivalent) increases and the contribution is a bit bigger, but still small. Why so many direct connections with such small contributions ? The signal would still reach a target neuron through its neighbors, more like in a GNN .. that would make more sense to me.
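To get a feeling for how quickly distant synapses become negligible, here is a sketch comparing a linear and an exponential fall-off. The functional forms and the parameters `max_distance` and `length_constant` are assumptions chosen for illustration, not the values used in the model.

```python
import math


def linear_contribution(distance: float, max_distance: float = 10.0,
                        strength: float = 1.0) -> float:
    """Contribution falling linearly to zero at `max_distance` (assumed form)."""
    return strength * max(0.0, 1.0 - distance / max_distance)


def exponential_contribution(distance: float, length_constant: float = 2.0,
                             strength: float = 1.0) -> float:
    """Contribution decaying as exp(-distance / length_constant) (assumed form)."""
    return strength * math.exp(-distance / length_constant)


for d in (1, 4, 8):
    print(d, round(linear_contribution(d), 3), round(exponential_contribution(d), 3))
```

With these illustrative parameters a synapse at distance 8 still delivers a fifth of its strength under the linear rule but less than 2% under the exponential one, so even doubling its strength would leave it close to irrelevant.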

As far as I can tell, I now have a good model for :

- Glutamatergic synapse (kinetics of glutamate and of AMPA)

- GABA synapses (half baked.. it is acting on the axon, resulting in 100% inhibition, but it is also affecting active synapses.. so it’s a half man half bear kind of a situation.. maybe half pig as well)