The firing rate of biological neurons is limited by the refractory period, a brief period after activation during which a second activation is not possible.

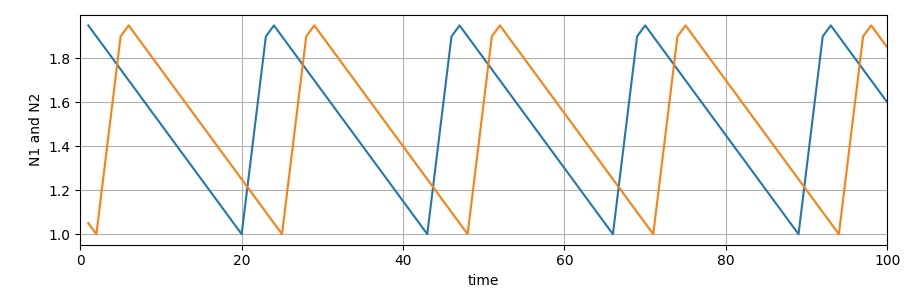

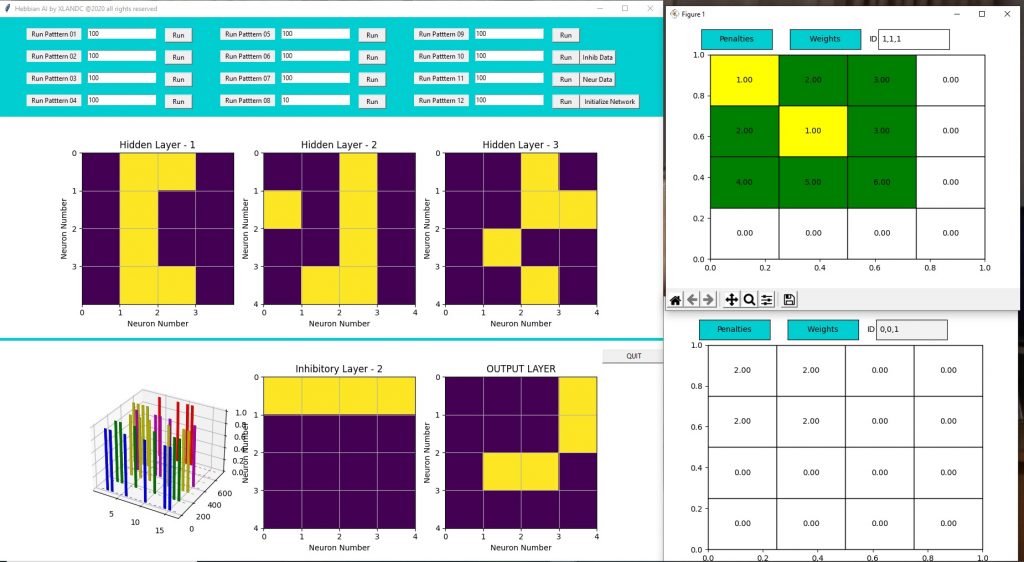

I managed to sync neurons firing within the same pattern… But that does not seem to be enough… Neighboring neurons, not part of the current pattern, remain in an unknown state. That is problematic because the current pattern can activate also close enough neurons that happened to be in a close to activation state… Inhibitory neurons cannot inhibit neurons that activate at at the same time.. So I figured I need a refractory period… after a firing event, synaptic strength should be decreased to a defined level. This in turn would create chaos within the next layer, because some cycles would bring zero signal while the previous layers is in a refractory cycle.. Maybe next layer should work on a different frequency … should update slower… Well, I’m sure there are other problems that will show up and I don’t like having to deal with the firing rate, that would add additional complexity making things even harder to understand.

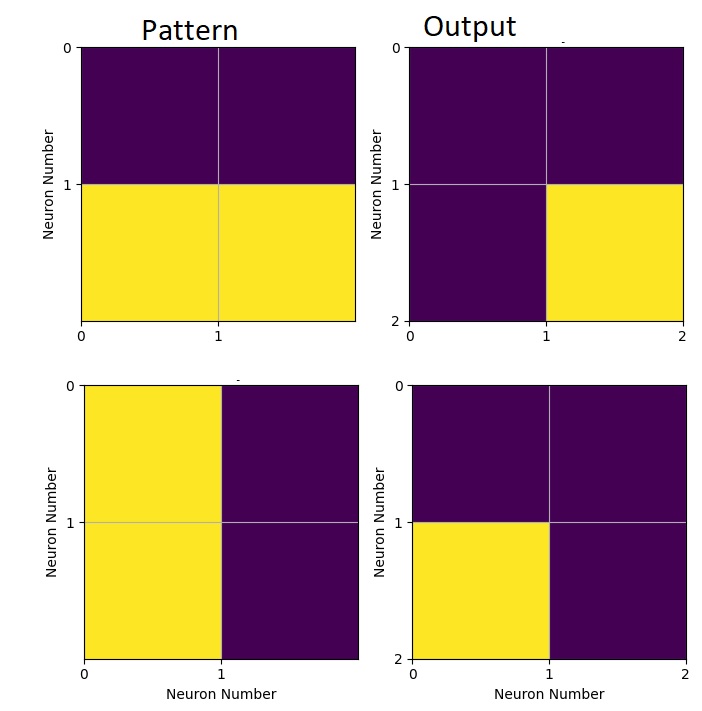

Am I sure that this is the desired behavior ? Not at all, but unless I have this working perfect, I cannot confirm or discard the hypothesis.. Right now, I’m not getting stable patterns (good or bad). The response depends on the previous pattern to some extent. If previous pattern was far, I get a (stable) response, if it was close, I get a different response.