I’m still in the process of changing the code to allow a variable size for each layer. Very tedious: it seems all functions are affected by this change.

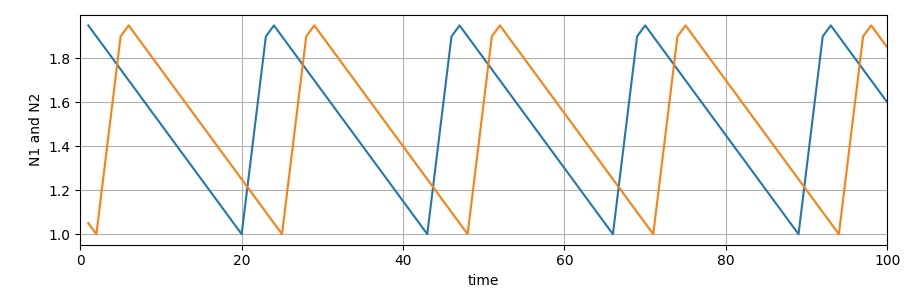

Anyway, I still have time to work on the theoretical aspects. So I’ve been thinking: even if my algorithms are not there yet, they have still allowed me to see some troubling results. It seems to me that the changes made during the “learning” process are “too irreversible”. All the changes I make within the neuron are event-driven, and each process is an equilibrium process, which is to say that the forward rate is event-driven but the reverse rate is time (cycle) driven. So while the changes are not irreversible, they can only be reverted by an event, and if that event is not present, the change will remain. This leads to situations where the AI sees things that are not there.

I observed this in previous versions, when I mentioned that the current result depends “too much” on the previous patterns, but the context seemed different at the time (see my previous posts). While I have not solved the synchronization issue from those posts, I’m now running “empty” cycles to bring the neurons to a neutral state. This way I can be sure that the learning process, and not the lack of synchronization, is what causes the ghosting.
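To make the forward/reverse idea a bit more concrete, here is a toy sketch of one equilibrium process with an event-driven forward step, a per-cycle reverse step, and “empty” cycles (cycles with no input event) used to relax the state back toward neutral. Everything here is hypothetical: `SynapseState`, `k_forward`, `k_reverse`, `run_empty_cycles` and the update rule are my assumptions for illustration, not the actual neuron code.

```python
from dataclasses import dataclass


@dataclass
class SynapseState:
    """Toy state for one equilibrium process (hypothetical model).

    x = 0.0 is the neutral state, x = 1.0 is fully "changed".
    """
    x: float = 0.0
    k_forward: float = 0.5   # assumed: fraction of remaining headroom gained per input event
    k_reverse: float = 0.05  # assumed: fraction of the current state lost per cycle

    def cycle(self, event: bool) -> None:
        # Forward step: only happens when an input event arrives.
        if event:
            self.x += self.k_forward * (1.0 - self.x)
        # Reverse step: happens every cycle, event or not.
        self.x -= self.k_reverse * self.x


def run_empty_cycles(state: SynapseState, tolerance: float = 1e-3,
                     max_cycles: int = 10_000) -> int:
    """Run cycles with no input event until the state is near neutral.

    Returns the number of empty cycles that were needed (capped at max_cycles).
    """
    n = 0
    while state.x > tolerance and n < max_cycles:
        state.cycle(event=False)
        n += 1
    return n


s = SynapseState()
for _ in range(5):
    s.cycle(event=True)      # a short burst of input events
n = run_empty_cycles(s)      # quiet cycles needed to get back near neutral
```

In this toy model, the smaller `k_reverse` is, the more empty cycles it takes to clear a change left by a burst of events; any pattern presented before that happens is read against a neuron that is not yet neutral, which is one way a “ghost” could appear.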

So the question now is: when, where and how to store long-term memory, and how to retrieve it in such a way that it will still make my AI see things that are not there 🙂 … as it does now. Where? In some other deep layers (hippocampus?). When? That is unknown. How? Similar to how things are stored now, but it depends on when. How to retrieve it? I need another variable in my synapse definition, so at some point down the line I’ll change that variable based on inputs from the layers storing long-term memory… I think.
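As a rough illustration of the “another variable in my synapse definition” idea, here is a sketch of a synapse with a second, slowly changing value that is only moved by input from the long-term memory layers. The class, the `long_term` field, `ltm_rate` and the way it is combined with the existing weight are all hypothetical assumptions, since the when and how are still open.

```python
from dataclasses import dataclass


@dataclass
class Synapse:
    """Hypothetical synapse with an extra long-term variable.

    weight:    the existing, event-driven short-term value.
    long_term: the proposed extra variable, nudged only by input coming
               from the (hypothetical) layers that store long-term memory.
    """
    weight: float = 0.0
    long_term: float = 0.0
    ltm_rate: float = 0.01  # assumed: how strongly LTM input moves long_term

    def apply_ltm_input(self, ltm_signal: float) -> None:
        # Move long_term a small step toward the signal from the LTM layers.
        self.long_term += self.ltm_rate * (ltm_signal - self.long_term)

    def effective_weight(self) -> float:
        # Assumed combination rule: the stored value simply biases the fast weight.
        return self.weight + self.long_term


syn = Synapse(weight=0.2)
for _ in range(100):
    syn.apply_ltm_input(1.0)  # repeated input from a long-term memory layer
```

Keeping the long-term value in a separate field means the event-driven `weight` can still return to neutral during empty cycles without erasing what the deeper layers have stored.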

On a different topic, my learning mechanism is, for all intents and purposes, a fitting function. Here there is also a problem: much like the Pauli exclusion principle for electrons, it seems that synapses cannot occupy the same space, so some “energy” values should be excluded or limited. I hope to clarify that when the code is up and running and I can do some real simulations. But it looks… complicated. It may not seem so, but this is linked to the previous topic 🙂
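One simple way to express “synapses cannot occupy the same space” is to reject any fitting step that would put a synapse’s energy value too close to another synapse’s. This is only a sketch under assumptions: `min_gap`, the list-of-floats representation and the reject-on-conflict rule are all hypothetical.

```python
from typing import List


def is_allowed(energy: float, others: List[float], min_gap: float) -> bool:
    """True if `energy` stays at least `min_gap` away from every other synapse's value."""
    return all(abs(energy - e) >= min_gap for e in others)


def constrained_update(energies: List[float], index: int,
                       proposed: float, min_gap: float) -> bool:
    """Apply a fitting step to one synapse only if it respects the exclusion rule.

    Returns True if the update was applied, False if it was rejected.
    """
    others = energies[:index] + energies[index + 1:]
    if is_allowed(proposed, others, min_gap):
        energies[index] = proposed
        return True
    return False


energies = [0.10, 0.50, 0.90]
constrained_update(energies, 0, 0.48, 0.05)  # rejected: too close to 0.50
constrained_update(energies, 0, 0.30, 0.05)  # accepted
```

Rejecting the step outright is the “excluded” option; the “limited” option would instead clamp the proposed value to the nearest allowed energy, which keeps the fitting moving but can bunch synapses up against each other’s exclusion zones.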