I finished updating the code, and I can now run networks with layers of different sizes. I’m no longer sure I needed that, but it’s done.

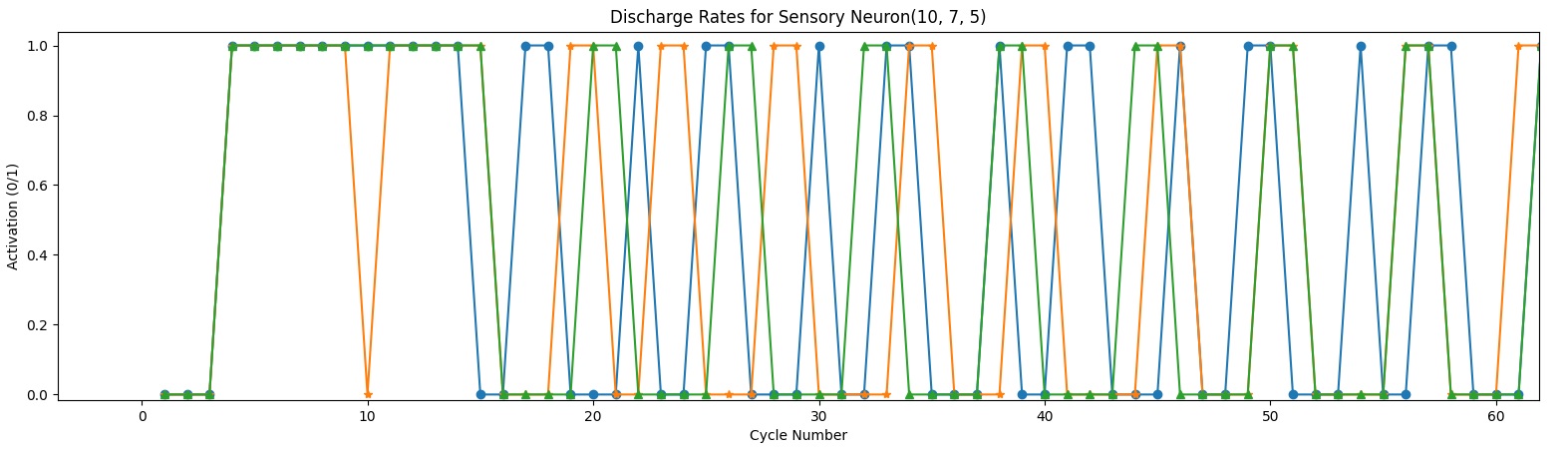

My plan was to carefully graph various variables from the learning mechanism when a single neuron receives multiple inputs with different distributions on its dendrites. But again I got stuck on the firing rates and the lack of synchronization between the input neurons and the hidden-layer neurons… I’m not sure they should synchronize in the first place, but if they should, I have two possible mechanisms: either the learning mechanism (synaptic plasticity) or the inhibitory neurons.

As it is right now, the learning mechanism is activated and stabilizes the firing rate of the hidden-layer neuron to some degree. Of course, if I use exactly the same firing rate for the input and the hidden neuron, it works without the learning algorithm kicking in at all. But I’m not happy that the neuron is always out of balance, changing variables back and forth for no good reason.
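To make the "stabilizing to some degree" behavior concrete, here is a toy sketch of a generic homeostatic rule. It is not the learning mechanism from this project: the neuron model, the function name `simulate_homeostasis`, and all parameters (`eta`, `window`, the starting weight) are assumptions chosen only for illustration. One input fires randomly at `input_rate`, and every `window` steps the synaptic weight is nudged so the output rate drifts toward `target_rate`.

```python
import random


def simulate_homeostasis(input_rate, target_rate, steps=20000,
                         window=500, eta=0.5, seed=0):
    """Toy neuron with one noisy input and a homeostatic weight update.

    Hypothetical model, for illustration only: each input spike adds
    `weight` to the membrane potential, and the neuron fires when the
    potential reaches `threshold` (the threshold is then subtracted).
    """
    rng = random.Random(seed)
    threshold = 1.0
    weight = 0.5            # assumed starting weight
    potential = 0.0
    spikes = 0
    rates = []              # measured output rate per window

    for t in range(1, steps + 1):
        # Input neuron fires with probability `input_rate` per step.
        if rng.random() < input_rate:
            potential += weight
        # Subtractive reset keeps the leftover charge, so the long-run
        # output rate is roughly input_rate * weight / threshold.
        if potential >= threshold:
            potential -= threshold
            spikes += 1
        # At the end of each window, push the weight toward the target.
        if t % window == 0:
            rate = spikes / window
            rates.append(rate)
            weight = max(0.0, weight + eta * (target_rate - rate))
            spikes = 0

    return weight, rates


if __name__ == "__main__":
    weight, rates = simulate_homeostasis(input_rate=0.5, target_rate=0.1)
    print("final weight:", round(weight, 3))
    print("last measured rates:", [round(r, 3) for r in rates[-5:]])
```

Even in this toy version the weight never fully settles: because the input is random, the measured rate in each window is a bit off target, so the rule keeps making small corrections back and forth. A longer `window` or a smaller `eta` reduces that jitter at the cost of slower adaptation.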

I joined a meetup group on Deep Learning and went to two meetings, one about GNNs and one about Transformers. I was vaguely familiar with both, but after seeing how they work in more detail I realized that my algorithms are not that different from either. Well, the differences are still big, but there are intriguing similarities. I believe the Transformer could be made much faster if modified with some of the algorithms I developed, but it’s hard to say until I understand everything better. Maybe I’ll talk to the guys hosting the event.

Anyway, firing rates seem to be a must for my algorithm. They allow the neuron to receive data from its neighbors in order to make its own decision, and they also allow for feedback from upper layers. Without firing rates, both would arrive too late.

Now I’m reading the literature because I’m undecided about how to proceed…