I’m defining firing rate as:

Cycles needed by a single presyanptic neuron to activate a postsynaptic neuron,

Assume a single presynaptic neuron could activate a single postsynaptic neuron. If the presynaptic neuron has to activate 3 times before it can activate the postysnaptic neuron then the firing rate is 1/3. The firing rate is then a function of how much potential can a single synapse bring per activation. So close (proximal) synapses to the neuron body would generate higher firing rates than distal synapses. So the firing rate is not entirely depended on the firing rate of the presynapstic neuron.

Another definition is for “semantics of dendrites” .

The semantics describes the relationship between two dendrites. If we define a Dendrite a set of synapses (with values ON/OFF) , then the semantic between dendrite A and B will be the percentage of synapses from dendrite B being ON, while dendrite A is generating an activation potential.. Still working on this definition.

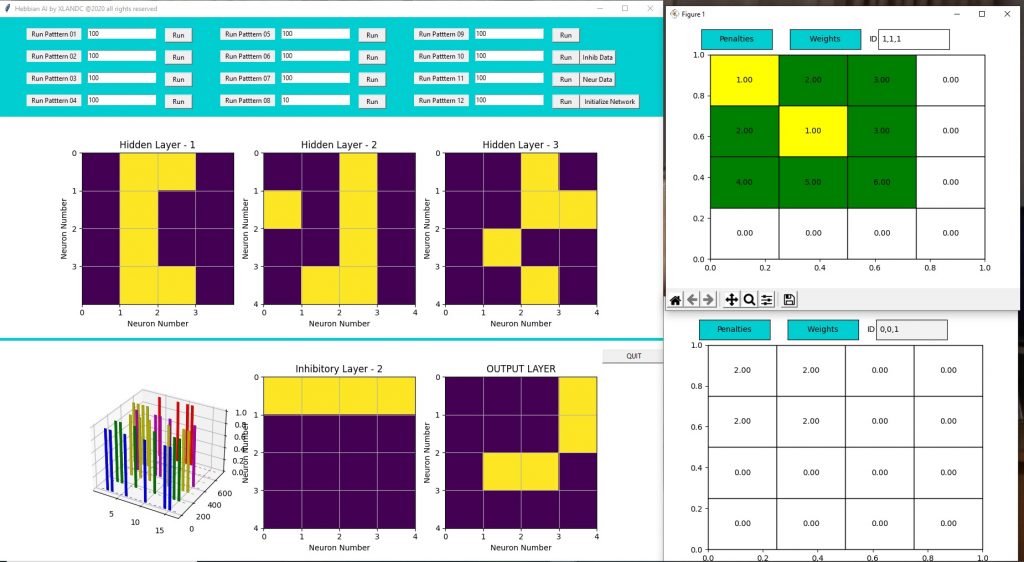

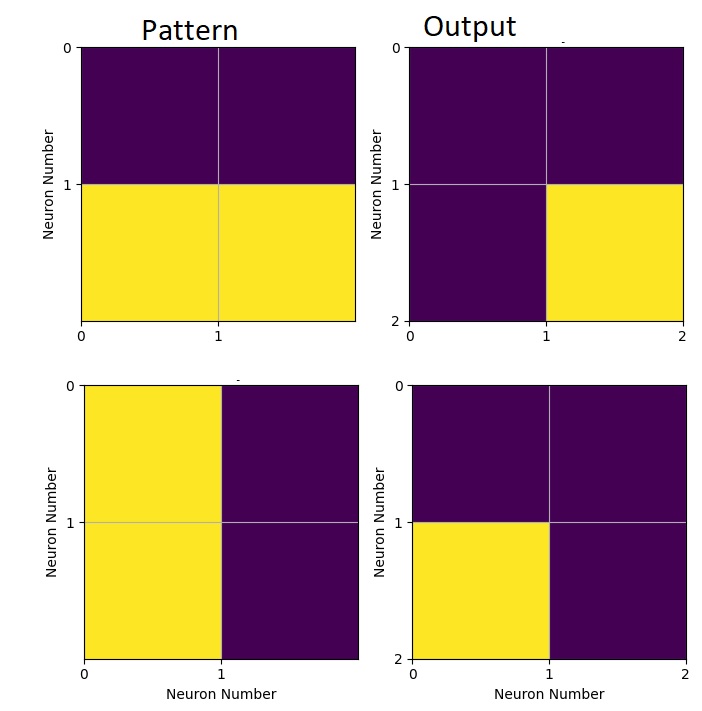

I hit a dead end with the current development branch. I calculated the theoretical dendrites and then tried to actually obtain all of them within my simulation by doing a precise training. It did not take long to realize that most of the dendrites could not actually form because they were being blocked on way or another. So I decided to switch to a more advance model in which I included the semantics of dendrites. Till now dendrites were independent. The model is actually what I started with but was too complex to handle and it had a theoretical drawback, the AI would go “blind”. I implemented most of the changes and indeed the blindness problem is there and I don’t have yet a solution. What do I mean by blindness ? Is some sort of over-training which leads to synapses being definitively removed from neurons.

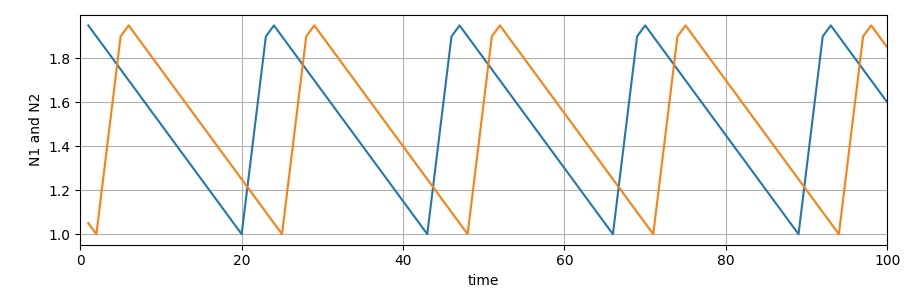

But more concerning than blindness is still neuronal synchronization. I also implemented a form of “firing rates”. But that immediately added a more chaotic behavior. Running same pattern would end up with different responses on different cycles, first would show up most probable response then the neurons will go through a cooling cycle and the next most probable response (if any possible), would show up…